iPhone X with Face Mapping

On Friday, Apple fans were queuing to get their hands on the newly released iPhone X: the flagship smartphone that Apple deemed a big enough update to skip a numeral. RIP iPhone 9.

The shiny new hardware includes a front-facing sensor module housed in the now infamous ‘notch’ which takes an unsightly but necessary bite out of the top of an otherwise (near) edge-to-edge display and thereby enables the smartphone to sense and map depth — including facial features.

So the iPhone X knows it’s your face looking at it and can act accordingly, e.g. by displaying the full content of notifications on the lock screen vs just a generic notice if someone else is looking. So hello contextual computing. And also hey there additional barriers to sharing a device.

Face ID has already generated a lot of excitement but the switch to a facial biometric does raise privacy concerns — given that the human face is naturally an expression-rich medium which, inevitably, communicates a lot of information about its owner without them necessarily realizing it.

You can’t argue with the fact that a face tells rather more stories over time than a mere digit can. So it pays to take a closer look at what Apple is (and isn’t) doing here as the iPhone X starts arriving in its first buyers’ hands.

Face ID

The core use for the iPhone X’s front-facing sensor module — aka the TrueDepth camera system, as Apple calls it — is to power a new authentication mechanism based on a facial biometric. Apple’s brand name for this is Face ID.

To use Face ID, iPhone X owners register their facial biometric by tilting their face in front of the TrueDepth camera. (NB: The full Face ID enrollment process requires two scans so takes a little longer than the below GIF.)

The face biometric system replaces the Touch ID fingerprint biometric which is still in use on other iPhones (including on the new iPhone 8/8 Plus).

Only one face can be enrolled for Face ID per iPhone X, versus multiple fingerprints for Touch ID. That makes sharing a device less easy, though you can still share your passcode.

As we’ve covered in detail before, Apple does not have access to the depth-mapped facial blueprints that users enroll when they register for Face ID. A mathematical model of the iPhone X user’s face is encrypted and stored locally on the device in the Secure Enclave.

Face ID also learns over time and some additional mathematical representations of the user’s face may also be created and stored in the Secure Enclave during day to day use — i.e. after a successful unlock — if the system deems them useful to “augment future matching”, as Apple’s white paper on Face ID puts it. This is so Face ID can adapt if you put on glasses, grow a beard, change your hair style, and so on.

The key point here is that Face ID data never leaves the user’s phone (or indeed the Secure Enclave). Any iOS app developers wanting to incorporate Face ID authentication into their apps do not gain access to it either. Rather, authentication happens via a dedicated authentication API that only returns a positive or negative response after comparing the input signal with the Face ID data stored in the Secure Enclave.
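
To make that yes-or-no contract concrete, here is a minimal Python sketch of the idea. It is a toy model, not Apple’s implementation: the `ToyEnclave` class, the `authenticate` helper, the feature vectors and the cosine-similarity threshold are all assumptions made up for illustration. The only point it tries to show is that a caller gets a boolean back and never sees the stored template.

```python
import math


class ToyEnclave:
    """Toy stand-in for a secure store (hypothetical, not Apple's design)."""

    def __init__(self, threshold=0.95):
        self._template = None        # enrolled representation, kept private
        self._threshold = threshold  # assumed similarity cut-off

    def enroll(self, features):
        # Store a copy of the enrolled representation inside the "enclave".
        self._template = list(features)

    def matches(self, features):
        # Compare a fresh capture with the template; reveal only True/False.
        if self._template is None or len(features) != len(self._template):
            return False
        dot = sum(a * b for a, b in zip(self._template, features))
        norm_template = math.sqrt(sum(a * a for a in self._template))
        norm_capture = math.sqrt(sum(b * b for b in features))
        norm = norm_template * norm_capture
        return norm > 0 and dot / norm >= self._threshold


def authenticate(enclave, capture):
    """What a third-party app would see: a boolean, never the face data."""
    return enclave.matches(capture)
```

In this sketch an app would call `authenticate(enclave, capture)` and branch on the result; nothing in the public surface hands back the enrolled data itself.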

Senator Al Franken wrote to Apple asking for reassurance on exactly these sorts of questions. Apple’s response letter also confirmed that it does not generally retain face images during day-to-day unlocking of the device, beyond the sporadic Face ID augmentations noted above.

“Face images captured during normal unlock operations aren’t saved but are instead immediately discarded once the mathematical representation is calculated for comparison to the enrolled Face ID data,” Apple told Franken.

Apple’s white paper further fleshes out how Face ID functions — noting, for example, that the TrueDepth camera’s dot projector module “projects and reads over 30,000 infrared dots to form a depth map of an attentive face” when someone tries to unlock the iPhone X (the system tracks gaze as well which means the user has to be actively looking at the face of the phone to activate Face ID), as well as grabbing a 2D infrared image (via the module’s infrared camera). This also allows Face ID to function in the dark.

“This data is used to create a sequence of 2D images and depth maps, which are digitally signed and sent to the Secure Enclave,” the white paper continues. “To counter both digital and physical spoofs, the TrueDepth camera randomizes the sequence of 2D images and depth map captures, and projects a device-specific random pattern. A portion of the A11 Bionic processor’s neural engine — protected within the Secure Enclave — transforms this data into a mathematical representation and compares that representation to the enrolled facial data. This enrolled facial data is itself a mathematical representation of your face captured across a variety of poses.”

So as long as you have confidence in the caliber of Apple’s security and engineering, Face ID’s architecture should give you confidence that the core encrypted facial blueprint to unlock your device and authenticate your identity in all sorts of apps is never being shared anywhere.

But Face ID is really just the tip of the tech being enabled by the iPhone X’s TrueDepth camera module.

Use ARKit for face tracking:

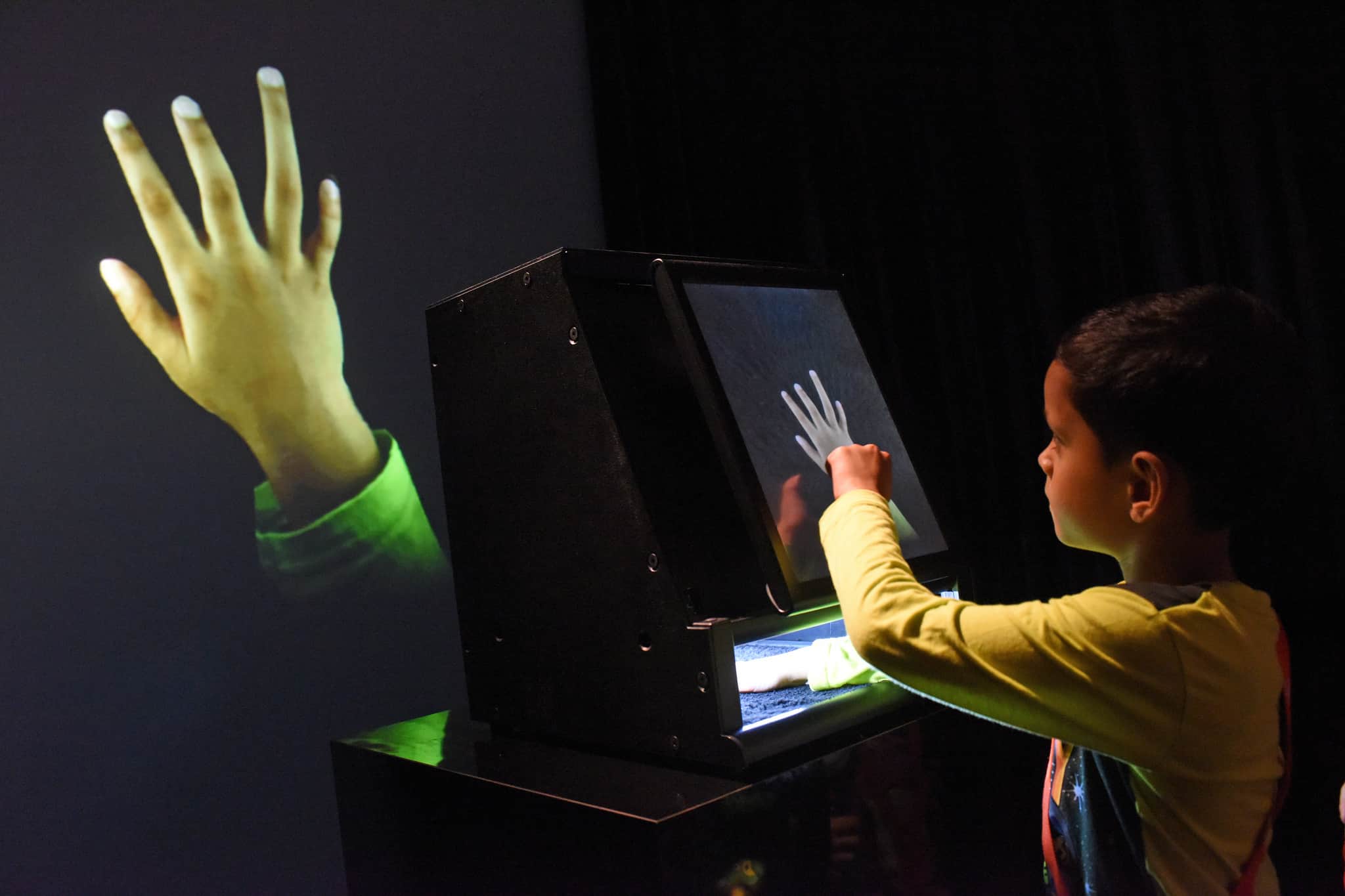

Apple is also intending the depth sensing module to enable flashy and infectious consumer experiences for iPhone X users by enabling developers to track their facial expressions, and especially for face-tracking augmented reality. AR generally being a huge new area of focus for Apple — which revealed its ARKit support framework for developers to build augmented reality apps at its WWDC event this summer.

And while ARKit is not limited to the iPhone X, ARKit for face-tracking via the front-facing camera is. So that’s a big new capability incoming to Apple’s new flagship smartphone.

“ARKit and iPhone X enable a revolutionary capability for robust face tracking in AR apps. See how your app can detect the position, topology, and expression of the user’s face, all with high accuracy and in real time,” writes Apple on its developer website, going on to flag up some potential uses for the API — such as for applying “live selfie effects” or having users’ facial expressions “drive a 3D character”.

The consumer showcase of what’s possible here is, of course, Apple’s new animoji. Aka the animated emoji characters which were demoed on stage when Apple announced the iPhone X and which enable users to virtually wear an emoji character as if it were a mask, and then record themselves saying (and facially expressing) something.

So an iPhone X user can automagically ‘put on’ the alien emoji. Or the pig. The fox. Or indeed the 3D poop.

But again, that’s just the beginning. With the iPhone X, developers can access ARKit for face-tracking to power their own face-augmenting experiences, such as the already showcased face masks in the Snap app.

“This new ability enables robust face detection and positional tracking in six degrees of freedom. Facial expressions are also tracked in real-time, and your apps provided with a fitted triangle mesh and weighted parameters representing over 50 specific muscle movements of the detected face,” writes Apple.
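
As a rough illustration of what “weighted parameters” driving a 3D character could look like, the sketch below maps per-expression weights between 0 and 1 onto the controls of a made-up character rig. The rig table, control names and ranges are hypothetical, the expression names are just illustrative labels, and this is plain Python rather than ARKit code.

```python
def clamp01(value):
    """Keep a weight inside the 0.0-1.0 range."""
    return max(0.0, min(1.0, value))


# Hypothetical rig: expression label -> (character control, value at full weight).
CHARACTER_RIG = {
    "jawOpen": ("jaw_rotation_deg", 25.0),
    "browInnerUp": ("brow_lift_mm", 8.0),
    "mouthSmileLeft": ("mouth_corner_left_mm", 6.0),
}


def drive_character(weights):
    """Scale each character control by the matching expression weight."""
    pose = {}
    for expression, (control, full_range) in CHARACTER_RIG.items():
        # Missing expressions count as 0.0, i.e. a neutral face.
        pose[control] = clamp01(weights.get(expression, 0.0)) * full_range
    return pose
```

Feeding in a new set of weights on every frame would make the character’s jaw, brows and mouth follow the user’s face, which is the general idea behind effects like animoji.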

(TC Tuc Crunch)

iphone X|launch with the risk of face mapping| Tuc Crunch

Reviewed by Hasnain Gakhar on November 05, 2017